Projects

I enjoy developing data science workflows for research projects and non-governmental/non-profit organizations. If you have a specific use case or simply want to discuss an idea, drop me a line!

Open Knowledge Maps

A visual interface to the world's scientific knowledge

Open Knowledge Maps are creating a visual interface to the world's scientific knowledge that can be used by anyone in order to dramatically improve the discoverability of research results.

At Open Knowledge Maps I work on the algorithms that cluster and summarize the search results.

Try it out!Embedbot

Related terms to cat: tgg, ggg, ggc, ttg, gac

Embedbot is the result of playing around with Apache Spark, word2vec and the Twitter API.

Embedbot replies to queries like "climate + change" with word embeddings generated on the EuropePMC Open Access corpus of 1.4 mio scientific papers.

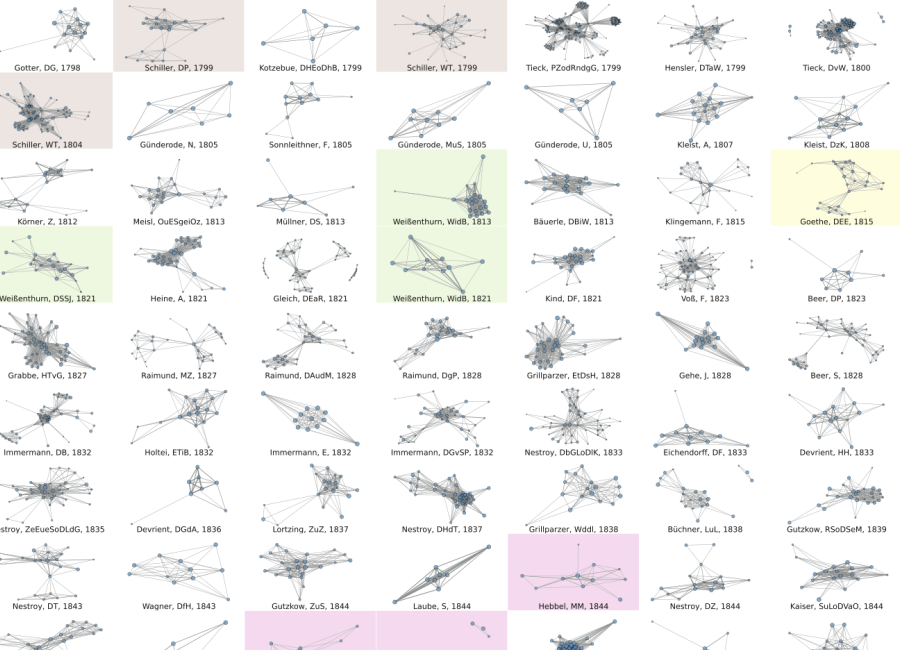

Ask it something!dlina

A workflow for a Digital Literary Network Analysis

Working group members are Frank Fischer, Mathias Göbel, Dario Kampkaspar, Hanna-Lena Meiners, Danil Skorinkin, and Peer Trilcke. We're looking into hundreds of dramatic texts ranging from Greek tragedies to 20th-century plays and work on larger German, French, English, and Russian corpora.

In dlina I work on a tool for network visualizations and network metrics.

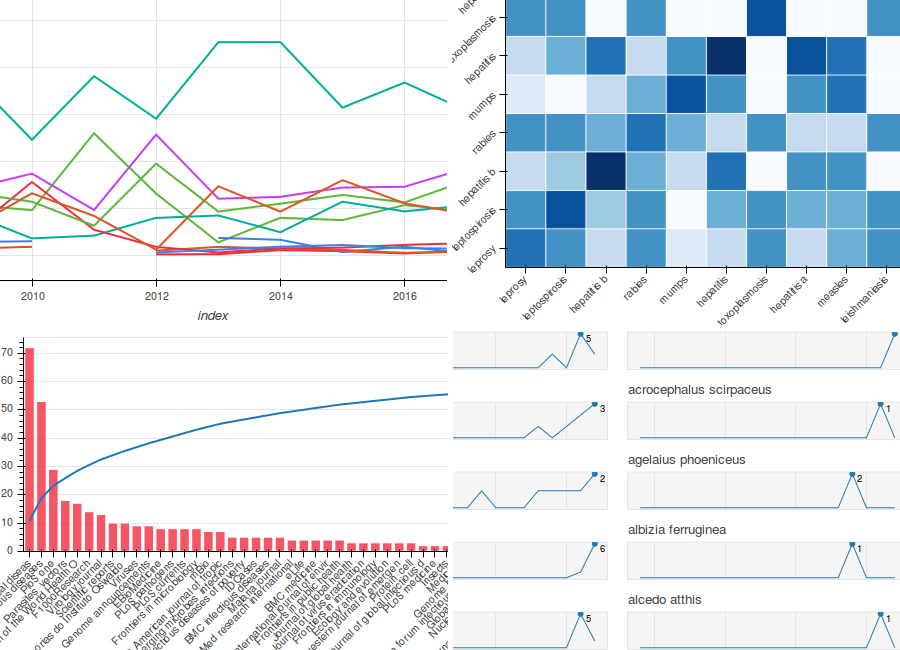

ContentMine

The Right To Read Is The Right To Mine

ContentMine develops open source software for mining the scientific literature and engage directly in supporting researchers to use mining, saving valuable time and opening up new research avenues.

At ContentMine I was responsible for downstream data analysis and visualizations for demonstration purposes.

Click here to learn more. Try a demo here!